Public Projects

Globes: Explore Historical Maps With the Apple Vision Pro

2024 - Present

In a collaboration between our team at Monash University and the David Rumsey Map Center at Stanford, we've launched the Globes app. The app lets users explore detailed reproductions of historic maps, which they can scale (up to 5 meters), rotate, and position freely. It aims to offer a novel way for users to engage with the David Rumsey map collection.

Outputs:

Read more about the app.

Download the Globes app from the App Store.

Eduard: Swiss-Style Relief Shading for Maps Using Machine Learning

2019 - Present

Eduard is designed to help cartographers create beautiful shaded reliefs for terrain maps. It uses machine learning (ML) models to create accurate Swiss-style shaded reliefs, which can be customised to adapt to your terrain.

Outputs:

Read more about our research and the app.

Publications: NACIS 2022, AutoCarto 2022 & IEEE InfoVis 2020

Visually Similar Artworks

2018 - 2019

This project uses convolutional neural networks and approximate search over their embeddings to discover visually similar artworks; read about how it works here.
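To give a rough feel for approximate search on embeddings, here is a minimal sketch using random-hyperplane locality-sensitive hashing. The project description does not say which approximate search method was used, so this technique is an assumption chosen for illustration. The `HyperplaneIndex` class, the 16-dimensional random vectors (standing in for CNN embeddings), and the `artwork_N` identifiers are all hypothetical.

```python
import math
import random
from collections import defaultdict


def normalise(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cosine(a, b):
    """Cosine similarity of two unit-length vectors."""
    return sum(x * y for x, y in zip(a, b))


class HyperplaneIndex:
    """Hypothetical index: vectors on the same side of every random
    hyperplane share a bucket, and only that bucket is searched."""

    def __init__(self, dim, n_planes=8, seed=0):
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0, 1) for _ in range(dim)]
                       for _ in range(n_planes)]
        self.buckets = defaultdict(list)
        self.vectors = {}

    def _key(self, vec):
        # One boolean per hyperplane: which side of the plane the vector is on.
        return tuple(sum(p * x for p, x in zip(plane, vec)) >= 0
                     for plane in self.planes)

    def add(self, item_id, vec):
        vec = normalise(vec)
        self.vectors[item_id] = vec
        self.buckets[self._key(vec)].append(item_id)

    def query(self, vec, k=3):
        # Approximate: true neighbours in other buckets are missed.
        vec = normalise(vec)
        candidates = self.buckets.get(self._key(vec), [])
        ranked = sorted(candidates,
                        key=lambda i: cosine(vec, self.vectors[i]),
                        reverse=True)
        return ranked[:k]


# Random vectors stand in for CNN embeddings of artworks (assumption).
rng = random.Random(42)
artworks = {f"artwork_{i}": [rng.gauss(0, 1) for _ in range(16)]
            for i in range(200)}

index = HyperplaneIndex(dim=16)
for art_id, embedding in artworks.items():
    index.add(art_id, embedding)

similar = index.query(artworks["artwork_7"], k=5)
print(similar)
```

The trade-off is the usual one for approximate search: each query scans a single bucket rather than the whole collection, at the cost of sometimes missing a close match that hashed elsewhere. Production systems typically use several hash tables or a dedicated library to recover that recall.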

Outputs:

Art or Not: a fun little app that enables serendipitous exploration of over 100,000 artworks, with an art critic twist (SensiLab write up).

Camera Obscurer: Generative art for design inspiration (EvoMUSART 2019)

Art Around You: Playful Exploration of Online Gallery Collections (NeurIPS 2018 - Creativity Workshop)

Big Earth Listening

2019

An interactive virtual acoustic installation for the MPavilion 2018-19 season. The installation consisted of:

4 hydrophones buried around the pavilion.

A Python server that synced up the live feeds and streamed all 4 channels with minimal latency.

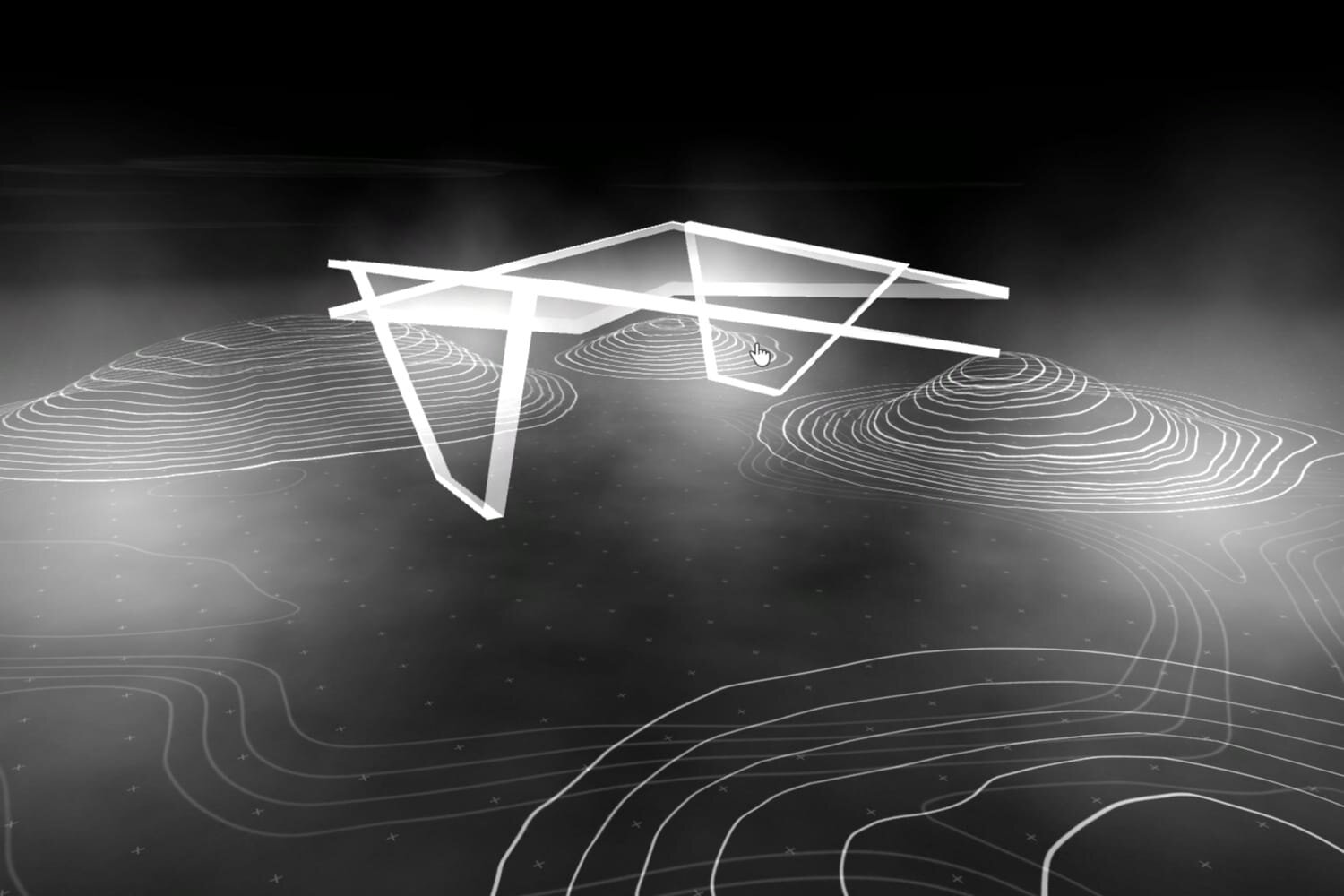

An accurate (but abstract) 3D representation of the pavilion, built in Unity.
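As an illustration of what syncing several live feeds can involve, the sketch below buffers chunks per channel and releases a multi-channel frame only once every channel has a chunk with the same sequence number. This is not the installation's actual server code. The `FeedSynchroniser` class, the sequence-number scheme, and the interleaved output layout are all assumptions made for the example.

```python
from collections import deque


class FeedSynchroniser:
    """Hypothetical helper: aligns per-channel audio chunks by sequence number."""

    def __init__(self, n_channels=4, max_buffered=8):
        # A bounded buffer per channel keeps latency low: old chunks fall off.
        self.buffers = [deque(maxlen=max_buffered) for _ in range(n_channels)]

    def push(self, channel, seq, samples):
        """Store one chunk of samples from a channel, tagged with its sequence number."""
        self.buffers[channel].append((seq, samples))

    def pop_frame(self):
        """Return (seq, interleaved_samples) when all channels line up, else None."""
        if any(not buf for buf in self.buffers):
            return None
        # The slowest channel's oldest chunk sets the sequence number to align on.
        target = max(buf[0][0] for buf in self.buffers)
        for buf in self.buffers:
            while buf and buf[0][0] < target:
                buf.popleft()  # drop chunks the other channels can no longer match
        if any(not buf or buf[0][0] != target for buf in self.buffers):
            return None
        chunks = [buf.popleft()[1] for buf in self.buffers]
        # Interleave: sample 0 of every channel, then sample 1 of every channel, ...
        interleaved = [s for frame in zip(*chunks) for s in frame]
        return target, interleaved


sync = FeedSynchroniser()
sync.push(0, 0, [1, 2])       # channel 0 is one chunk ahead of the others
sync.push(0, 1, [3, 4])
for channel in (1, 2, 3):
    sync.push(channel, 1, [channel * 10, channel * 10 + 1])
print(sync.pop_frame())
```

Dropping stale chunks rather than waiting for them favours low latency over completeness, which suits a live installation; a recording pipeline would more likely pad or wait instead.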

Read more here, or see the SensiLab write up.

Watch a YouTube video or play with the interactive recorded tour.

Marble Mirror

2018

Marble Mirror is a real-time interactive system that acts as a kind of generative funhouse mirror. The system is made up of two parts: a facial landmark detector and a generative adversarial network (GAN).

The motivation behind the idea was to bring gallery exhibitions to life through playful interactions.